Patroni PostgreSQL Cluster With HAProxy

Posted on Sat 26 February 2022 in Linux-Open-Source

Patroni PostgreSQL Cluster

Important

High Availability PostgreSQL with Patroni and HAproxy Cluster

- Patroni is a tool for setting up PostgreSQL servers in high-availability configuration steps. It is written in Python and available on PyPi.

- The proposed solution addresses the failover problem when one of the nodes fails, but it does not address load balancing when multiple requests to the PostgreSQL database are made.

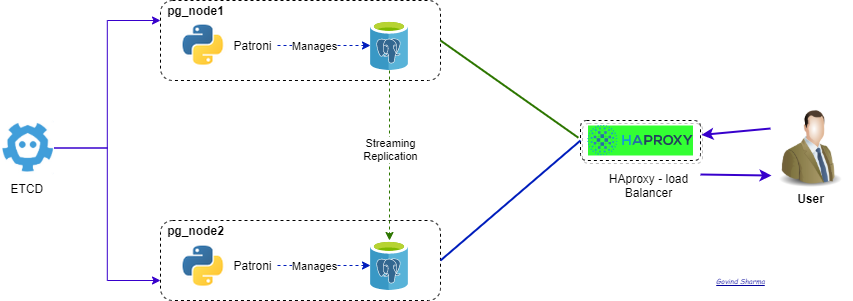

Patroni architecture

|

Prerequisites

- Patroni - Postgresql operation orchestrator: it supports bootstrapping, automatic failover, streaming replication.

- Etcd – Manages the cluster: an open-source distributed key-value store providing a hub for cluster coordination and state management. It handles leader elections during network partitions and it can tolerate machine failure.

- HAProxy – Load Balancer: it offers load balancing and proxying for TCP and HTTP based applications.

Installation

Note

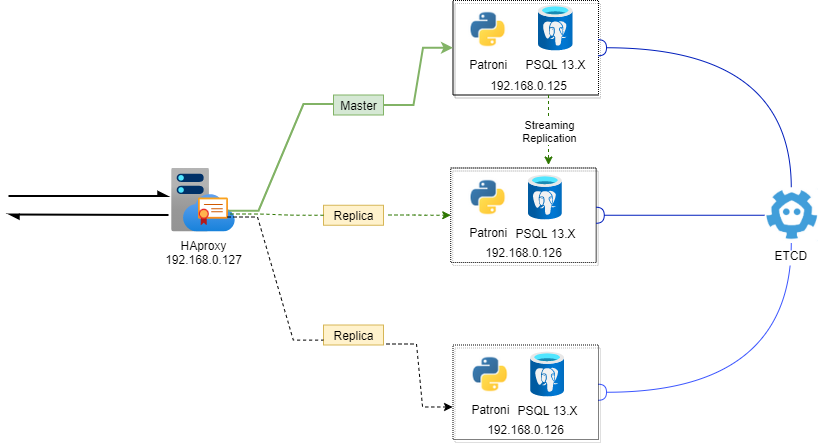

pg_node1 - 192.168.0.125, pg_node2 - 192.168.0.126, pg_node3 - 192.168.0.129

ETCD - 192.168.0.127

HAproxy_node1 - 192.168.0.127

Diagram

Install PostgreSQL

Enable PostgreSQL13 module on pg_node1 or pg_node2 or pg_node3

dnf -qy module disable postgresql #Disable defualt repo

dnf -qy module enable postgresql:13 #Enable postgresql 13 repo

Install PostgreSQL on pg_node1 or pg_node2 or pg_node3

dnf -y install postgresql postgresql-server postgresql-libs postgresql-odbc postgresql-contrib

Install Patroni

Patroni is a cluster manager used to customize and automate deployment and maintenance of PostgreSQL HA (High Availability) clusters.

- on pg_node1 or pg_node2 or pg_node3

dnf -y install yum-utils

Install pgdg-redhat-repo-latest

dnf -y install https://download.postgresql.org/pub/repos/yum/reporpms/EL-8-x86_64/pgdg-redhat-repo-latest.noarch.rpm

- Install patroni

dnf -y install patroni

Note: pip3 install -r requirements.txt may not work in later versions of Patroni. Instead, you will need to install the dependencies like this:

pip3 install patroni[dependencies]

pip3 install patroni[etcd]

#Or

modprobe softdog #for watchdog

Patroni configuration files

- Patroni is invoked with the path to a configuration file. There is a configuration file for each PostgreSQL node. The configuration file is written in .yml format.

configuration patroni for pg_node1 or pg_node3

- Create folder for patroni configuration file.

install -d /etc/patroni/

patroni defualt configuration file name is /etc/patroni/patroni.yml

- vim /etc/patroni/patroni.yml

vim /etc/patroni/patroni.yml

Remove everything from this file, and add the following configuration parameters. Make sure, you change namespace, listen and connect_address to reflect yours.

scope: postgres

namespace: /pg_cluster/

name: pg_node1

restapi:

listen: 192.168.0.125:8008

connect_address: 192.168.0.125:8008

etcd:

host: 192.168.0.125:2379

bootstrap:

dcs:

ttl: 30

loop_wait: 10

retry_timeout: 10

maximum_lag_on_failover: 1048576

postgresql:

use_pg_rewind: true

use_slots: true

parameters:

initdb:

- encoding: UTF8

- data-checksums

pg_hba:

- host all all all md5

- host replication replicator 192.168.0.125/24 md5

- host replication replicator 192.168.0.126/24 md5

- host replication replicator 127.0.0.1/32 md5

- host replication replicator 192.168.0.129/24 md5

users:

admin:

password: admin

options:

- createrole

- createdb

postgresql:

listen: 192.168.0.125:5432

connect_address: 192.168.0.125:5432

data_dir: /var/lib/pgsql/data

bin_dir: /usr/bin

pgpass: /tmp/pgpass

authentication:

replication:

username: replicator

password: replicator

superuser:

username: postgres

password: postgres

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: false

watchdog:

mode: automatic

device: /dev/watchdog

safety_margin: 5

configuration patroni for pg_node2

- Create folder for patroni configuration file.

install -d /etc/patroni/

patroni defualt configuration file name is /etc/patroni/patroni.yml

- vim /etc/patroni/patroni.yml

vim /etc/patroni/patroni.yml

Remove everything from this file, and add the following configuration parameters. Make sure, you change namespace, listen and connect_address to reflect yours.

scope: postgres

namespace: /pg_cluster/

name: pg_node2

restapi:

listen: 192.168.0.126:8008

connect_address: 192.168.0.126:8008

etcd:

host: 192.168.0.127:2379

bootstrap:

dcs:

ttl: 30

loop_wait: 3

retry_timeout: 3

maximum_lag_on_failover: 1048576

postgresql:

use_pg_rewind: true

use_slots: true

parameters:

wal_level: replica

hot_standby: "on"

wal_keep_segments: 400

max_wal_senders: 5

max_replication_slots: 5

checkpoint_timeout: 30

initdb:

- encoding: UTF8

- data-checksums

pg_hba:

- host all all all md5

- host replication replicator 192.168.0.125/24 md5

- host replication replicator 192.168.0.126/24 md5

- host replication replicator 192.168.0.129/24 md5

- host replication replicator 127.0.0.1/32 md5

- host replication replicator 0.0.0.0/32 md5

users:

admin:

password: admin

options:

- createrole

- createdb

postgresql:

listen: 192.168.0.126:5432

connect_address: 192.168.0.126:5432

data_dir: /var/lib/pgsql/data

bin_dir: /usr/bin

pgpass: /tmp/pgpass

authentication:

replication:

username: replicator

password: replicator

superuser:

username: postgres

password: postgres

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: false

watchdog:

mode: automatic

device: /dev/watchdog

safety_margin: 5

Save and close the editor when you are finished.

Install etcd

Important

etcd is a strongly consistent, distributed key-value store that provides a reliable way to store data that needs to be accessed by a distributed system or cluster of machines. We will use etcd to store the state of the Postgres cluster in order to keep the Postgres cluster up and running.

note Make sure to download the latest version of etcd.

curl -L https://github.com/coreos/etcd/releases/download/v3.3.2/etcd-v3.3.2-linux-amd64.tar.gz -/tmp/etcd-v3.3.2-linux-amd64.tar.gzUnpack the archive:

tar xvf etcd-v3.3.2-linux-amd64Start Etcd:

./etcd&

OR you can install etcd as a service that you will need to start.

Configuration ETCD

vim /etc/etcd/etcd.conf

- Added menmber configuration for Haproxy_node1

[Member]

#ETCD_CORS=""

ETCD_NAME="pg_node1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

ETCD_LISTEN_PEER_URLS="http://192.168.0.125:2380,http://localhost:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.0.127:2379,http://localhost:2379"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.0.125:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.127:2379"

ETCD_INITIAL_CLUSTER="pg_node1=http://192.168.0.125:2380,pg_node2=http://192.168.0.126:2380"

ETCD_INITIAL_CLUSTER_TOKEN="pg_node"

ETCD_INITIAL_CLUSTER_STATE="new"

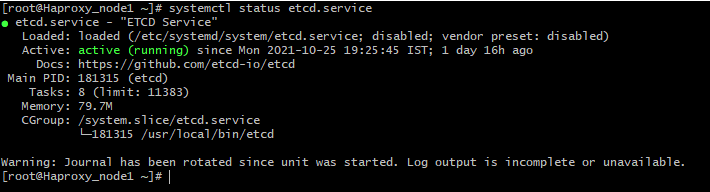

start etcd service

etcd.service

[Unit] Description="ETCD Service" Documentation=https://github.com/etcd-io/etcd After=network.target [Service] Type=notify User=etcd Group=etcd ProtectSystem=full ProtectHome=read-only PrivateTmp=yes PrivateDevices=yes SecureBits=keep-caps AmbientCapabilities=CAP_IPC_LOCK NoNewPrivileges=yes EnvironmentFile=/etc/etcd/etcd.conf ExecStart=/usr/local/bin/etcd Restart=always RestartSec=10s LimitNOFILE=40000 [Install] WantedBy=multi-user.target

systemctl enable --now etct.service

|

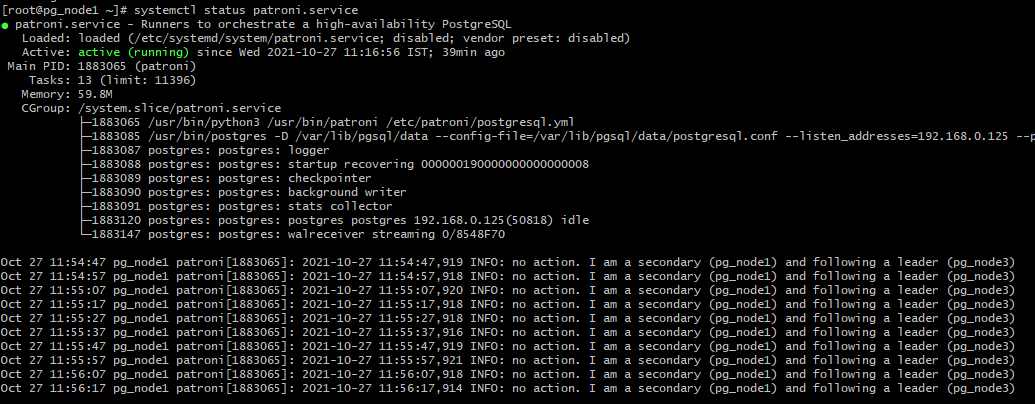

Login pg_node1

- start patroni service

systemctl enable --now patroni.serice

|

Note

ETCD add pg_node2 and pg_node3 member with below command

etcdctl member remove pg_node2 http://192.168.0.126:2380

etcdctl member remove pg_node3 http://192.168.0.129:2380

Check etcd members

etcdctl member list

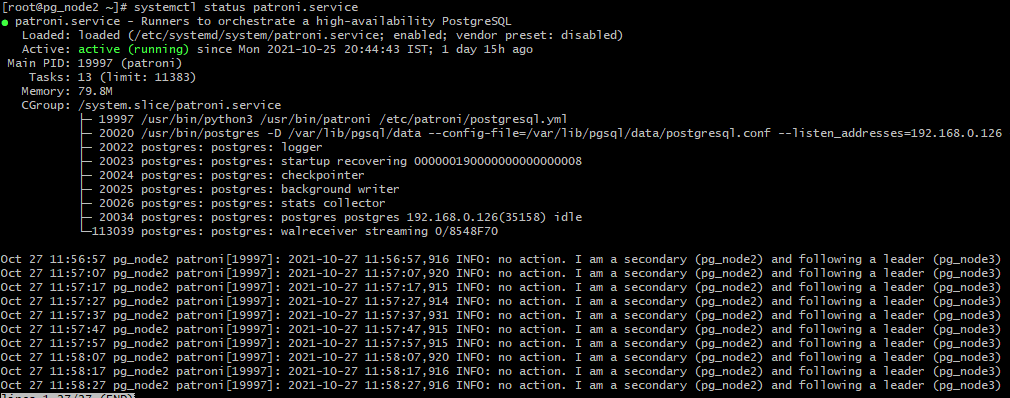

Login pg_node2

- start patroni service

systemctl enable --now patroni.serice

|

Note

same configure on pg_node3.

Install HAProxy

Important

HAProxy is free, open source software that provides a high availability load balancer and proxy server for TCP and HTTP-based applications that spreads requests across multiple servers. HAProxy forwards the connection to whichever node is currently the master. It does this using a REST endpoint that Patroni provides. Patroni ensures that, at any given time, only the master node will appear as online, forcing HAProxy to connect to the correct node.

login HAproxy_node1 and install HAproxy

dnf install haproxy -y

Configure HAProxy

- Login on both node and configure

backup default haproxy.conf file

cp -f /etc/haproxy/haproxy.cfg cp -f /etc/haproxy/haproxy.cfg-orig

Remove everything from this file, and add the following configuration parameters.

#--------------------------------------------------------------------- # Example configuration for a possible web application. See the # full configuration options online. # # https://www.haproxy.org/download/1.8/doc/configuration.txt # #--------------------------------------------------------------------- #--------------------------------------------------------------------- # Global settings #--------------------------------------------------------------------- global log 127.0.0.1 local0 ssl-default-bind-options no-sslv3 tune.ssl.default-dh-param 2048 chroot /var/lib/haproxy pidfile /var/run/haproxy.pid maxconn 4000 user haproxy group haproxy daemon stats socket /var/lib/haproxy/stats defaults mode tcp log global option tcplog option dontlognull option http-server-close #option forwardfor except 127.0.0.0/8 option redispatch retries 3 timeout http-request 10s timeout queue 1m timeout connect 10s timeout client 1m timeout server 1m timeout http-keep-alive 10s timeout check 10s #--------------------------------------------------------------------- # main frontend which proxys to the backends #--------------------------------------------------------------------- listen stats mode http bind *:8089 stats enable stats uri / listen primary mode tcp bind *:5432 timeout client 10800s timeout server 10800s balance leastconn option httpchk OPTIONS /master option allbackups balance leastconn http-check expect status 200 default-server inter 3s fall 3 rise 3 on-marked-down shutdown-sessions server pg_node1 192.168.0.125:5432 maxconn 1000 check port 8008 #check-ssl verify none server pg_node2 192.168.0.126:5432 maxconn 1000 check port 8008 server pg_node2 192.168.0.129:5432 maxconn 1000 check port 8008 listen standbys mode tcp bind *:5433 timeout client 10800s timeout server 10800s balance leastconn option httpchk OPTIONS /replica option allbackups balance leastconn http-check expect status 200 default-server inter 3s fall 3 rise 3 on-marked-down shutdown-sessions server pg_node1 192.168.0.125:5432 maxconn 1000 check port 8008 server pg_node2 192.168.0.126:5432 maxconn 1000 check port 8008 server pg_node2 192.168.0.129:5432 maxconn 1000 check port 8008

Check configuration file with below command;

haproxy -c -V -f /etc/haproxy/haproxy.cfg

If any error correct then start haproxy service.

setsebool -P haproxy_connect_any on systemctl enable --now haproxy.service

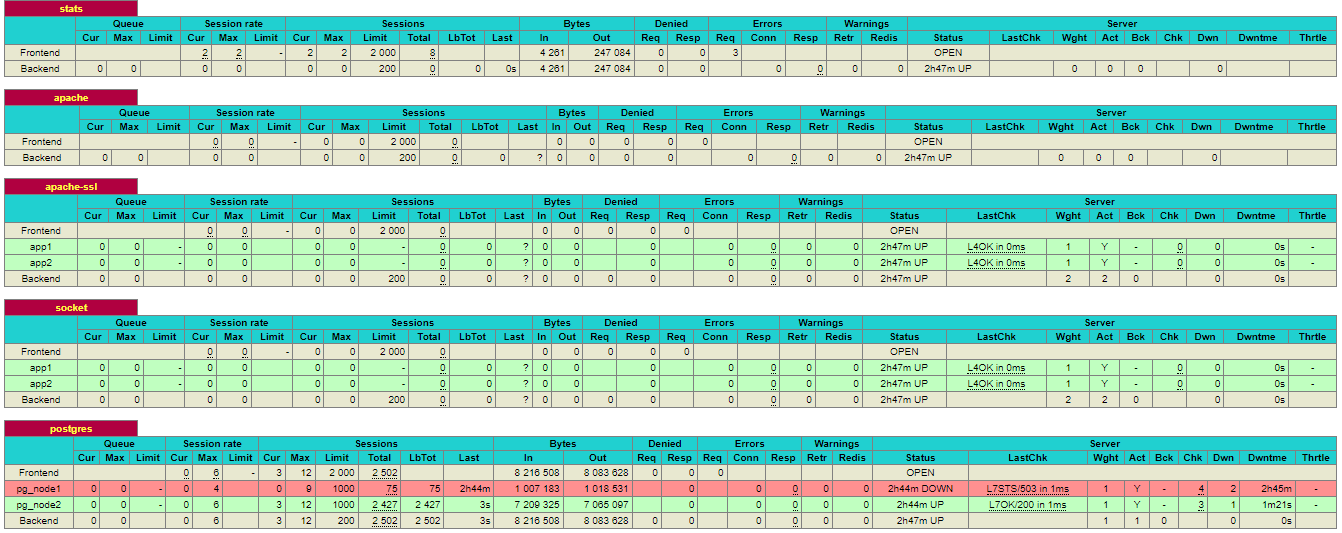

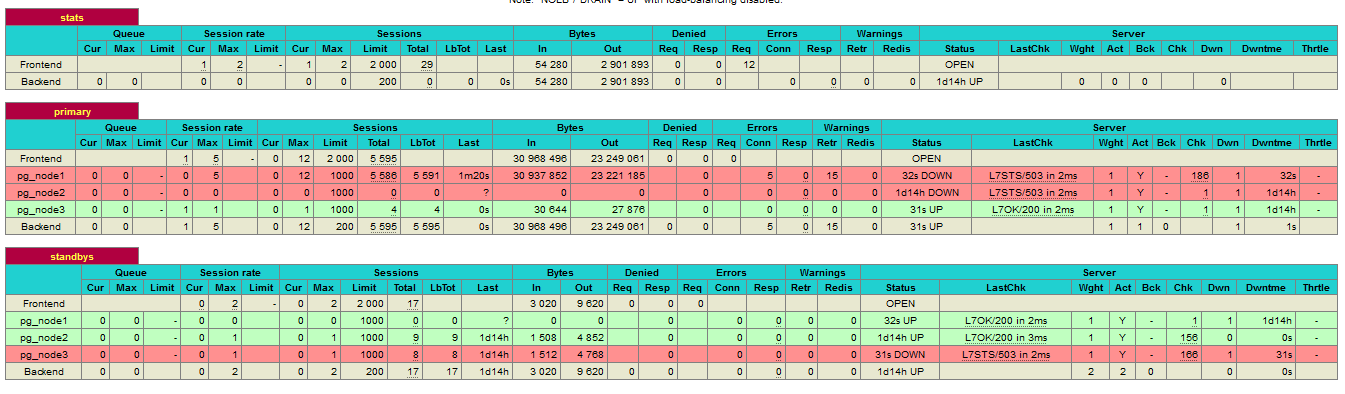

HAproxy Statistics Report;

|

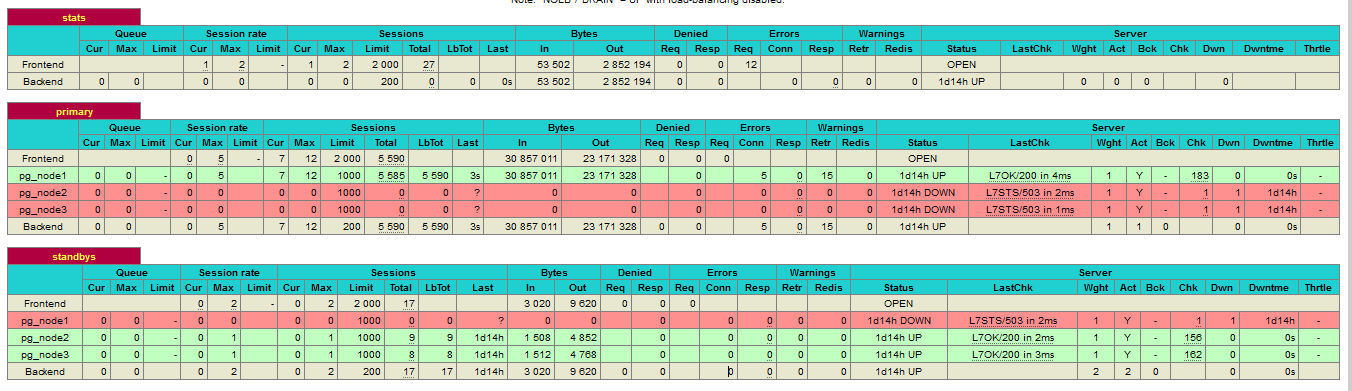

If pg_node2 stop haproxy cluster automatically up pg_node1;

example

|

- pg_node1 is going down!

|

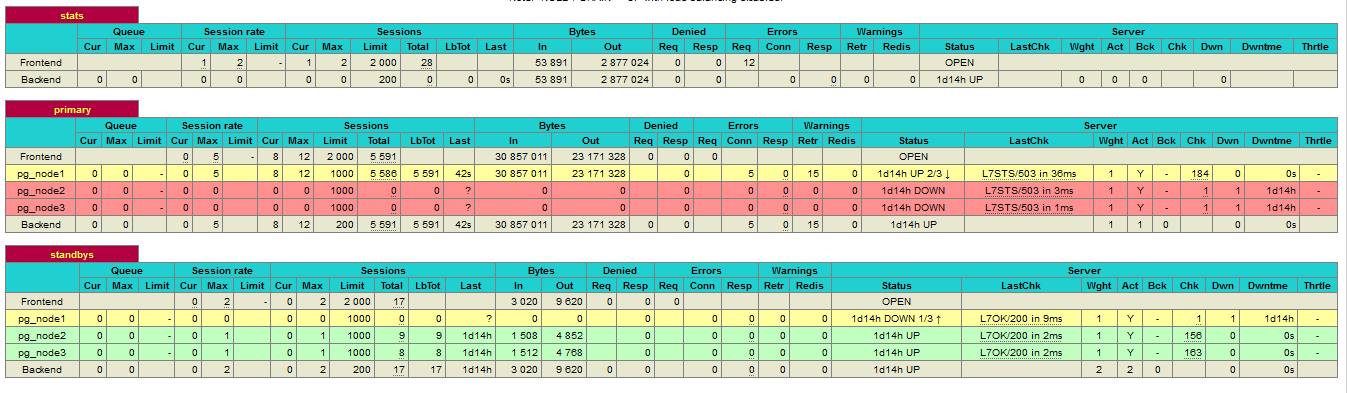

- pg_node3 is going up!

|